
Creating and customizing a robots.txt file

Last updated 2018-08-16

The robots.txt file tells web robots how to crawl webpages on your website. You can use Fastly's web interface to create and configure a robots.txt file. If you follow the instructions in this guide, Fastly will serve the robots.txt file from cache so the requests won't hit your origin.

Creating a robots.txt file

To create and configure your robots.txt file, follow the steps below:

- Log in to the Fastly web interface.

- From the Home page, select the appropriate service. You can use the search box to search by ID, name, or domain. You can also click Compute services or CDN services to access a list of services by type.

- Click Edit configuration and then select the option to clone the active version.

- Click Content.

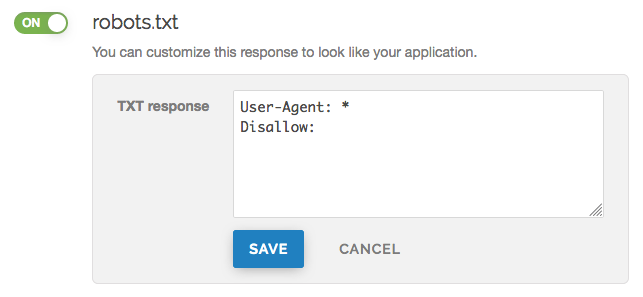

- Click the robots.txt switch to enable the robots.txt response.

- In the TXT Response field, customize the response for the robots.txt file.

- Click Save to save the response.

- Click Activate to deploy your configuration changes.
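Before you click Save, you might want to sanity-check the rules you typed into the TXT Response field. The sketch below uses Python's standard `urllib.robotparser` module to ask whether a crawler may fetch a given URL. The `/private/` rule, the `Googlebot` user agent, and the `www.example.com` URLs are hypothetical placeholders. This parser matches `Disallow` values as plain path prefixes and does not expand `*` wildcards inside a path, so it suits simple prefix rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical contents for the TXT Response field (plain prefix rules only).
TXT_RESPONSE = """\
User-agent: *
Disallow: /private/
"""


def allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if `user_agent` may fetch `url` according to `robots_txt`."""
    parser = RobotFileParser()
    # parse() accepts an iterable of lines and marks the rules as loaded.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for path in ("/", "/private/report.html"):
        url = "https://www.example.com" + path
        verdict = "allowed" if allowed(TXT_RESPONSE, "Googlebot", url) else "disallowed"
        print(path, verdict)
```

Because `User-agent: *` applies to every crawler, any user agent string gives the same answer here.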

Manually creating and customizing a robots.txt file

If you need to customize the robots.txt response further, you can follow the steps below to manually create the synthetic response and the condition that triggers it:

- Log in to the Fastly web interface.

- From the Home page, select the appropriate service. You can use the search box to search by ID, name, or domain. You can also click Compute services or CDN services to access a list of services by type.

- Click Edit configuration and then select the option to clone the active version.

- Click Content.

- Click Set up advanced response.

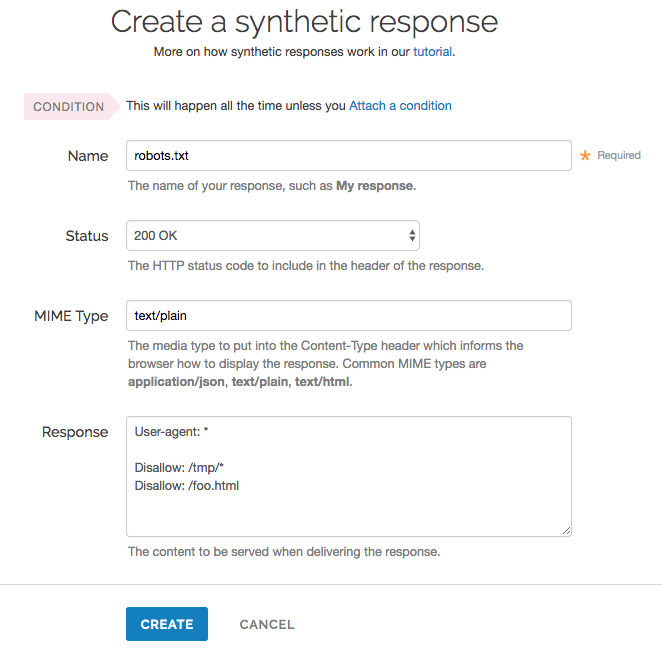

- Fill out the Create a synthetic response fields as follows:
  - In the Name field, enter an appropriate name (for example, `robots.txt`).
  - Leave the Status menu set at its default, `200 OK`.
  - In the MIME Type field, enter `text/plain`.
  - In the Response field, enter at least one `User-agent` string and one `Disallow` string. For instance, the example shown in the screenshot above tells all user agents (via the `User-agent: *` string) that they are not allowed to crawl anything in the `/tmp/` directory or the `/foo.html` file (via the `Disallow: /tmp/*` and `Disallow: /foo.html` strings, respectively).

- Click Create.

- Click the Attach a condition link to the right of the newly created response.

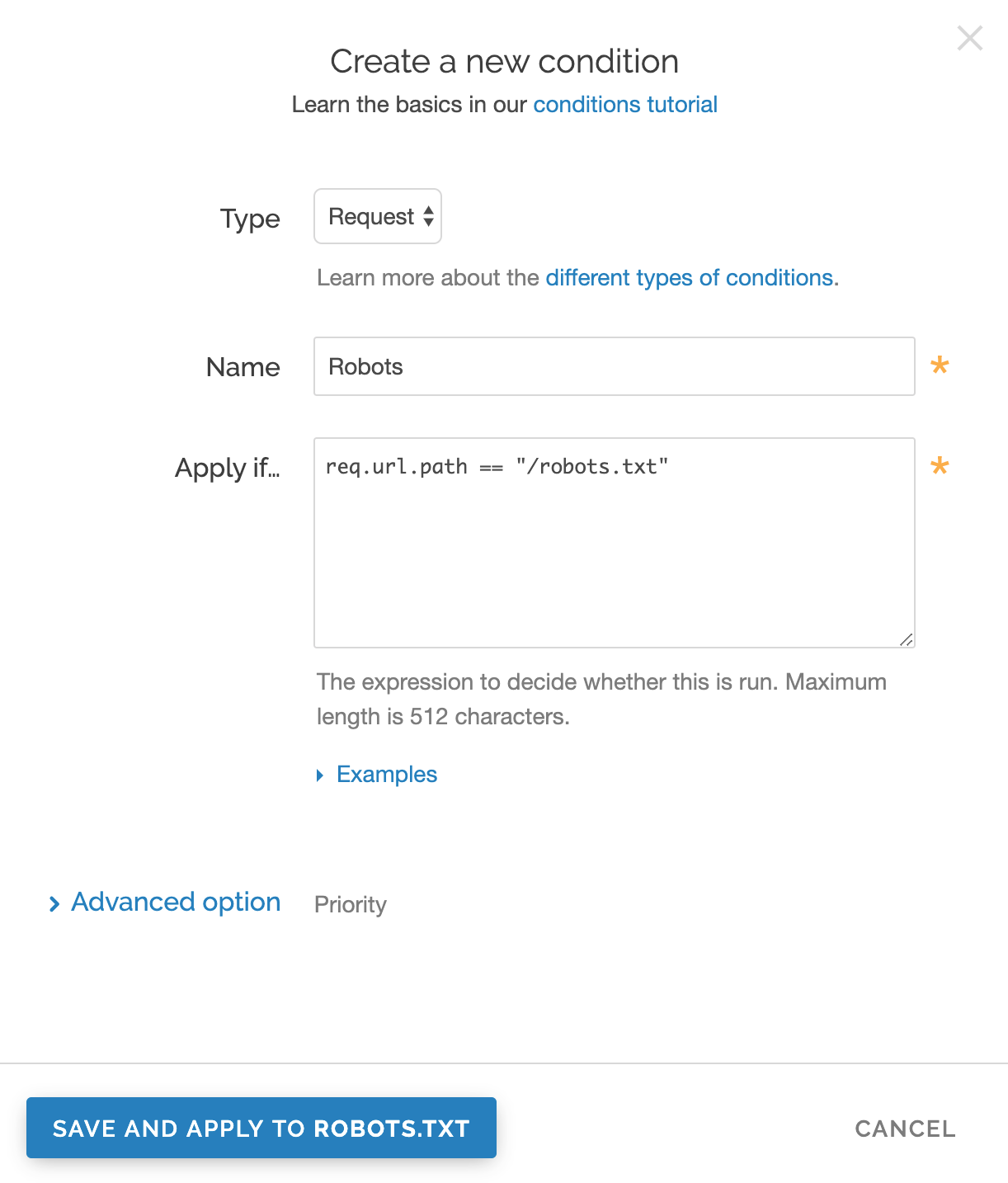

- Fill out the Create a condition fields as follows:
  - From the Type menu, select the desired condition (for example, `Request`).
  - In the Name field, enter a meaningful name for your condition (for example, `Robots`).
  - In the Apply if field, enter the logical expression to execute in VCL to determine whether the condition resolves as true or false. In this case, the expression compares the request path to the location of your robots.txt file (for example, `req.url.path == "/robots.txt"`).

- Click Save.

- Click Activate to deploy your configuration changes.
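The example response described in the steps above uses a `*` wildcard (`Disallow: /tmp/*`). Major crawlers such as Googlebot treat `*` as matching any sequence of characters, as standardized in RFC 9309, whereas Python's `urllib.robotparser` matches rules as plain prefixes. The simplified matcher below is a sketch for checking which paths that example response blocks. It assumes a single `User-agent: *` group, handles only `Disallow` lines plus the `*` and trailing `$` operators, and ignores `Allow` rules and comments, so it is not a complete robots.txt implementation.

```python
import re

# The example response described in the steps above.
ROBOTS_TXT = """\
User-agent: *
Disallow: /tmp/*
Disallow: /foo.html
"""


def rule_to_regex(rule: str) -> re.Pattern:
    """Translate a Disallow value into a regex: '*' matches any characters, a trailing '$' anchors the end."""
    anchored = rule.endswith("$")
    if anchored:
        rule = rule[:-1]
    body = ".*".join(re.escape(part) for part in rule.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def disallow_rules(robots_txt: str) -> list:
    """Collect non-empty Disallow values (simplification: assumes one User-agent: * group)."""
    rules = []
    for line in robots_txt.splitlines():
        field, _, value = line.partition(":")
        if field.strip().lower() == "disallow" and value.strip():
            rules.append(value.strip())
    return rules


def is_allowed(robots_txt: str, path: str) -> bool:
    """A path is blocked if any Disallow rule matches it from the start (re.match anchors at the beginning)."""
    return not any(rule_to_regex(rule).match(path) for rule in disallow_rules(robots_txt))


if __name__ == "__main__":
    for path in ("/", "/tmp/cache/page.html", "/foo.html", "/foo.htm"):
        print(path, "allowed" if is_allowed(ROBOTS_TXT, path) else "disallowed")
```

Because rules match from the start of the path, `/tmp/*` blocks everything under `/tmp/` but not the bare path `/tmp`, and `/foo.html` blocks that file without affecting `/foo.htm`.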

NOTE

For an in-depth explanation of creating custom responses, check out our Responses Tutorial.
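To make the pairing of the synthetic response and its condition concrete, here is a small Python model of what they do together. When the request path equals `/robots.txt`, the edge returns the synthetic `200 OK`, `text/plain` body. Otherwise, the request continues through normal processing. This is an illustration only, not Fastly code: `handle_request` and the tuple it returns are hypothetical. The sketch also assumes that VCL's `req.url.path` is the URL path with any query string removed, which is why `/robots.txt?utm_source=x` still matches.

```python
from typing import Optional, Tuple
from urllib.parse import urlsplit

# Body entered in the Response field (the example from the steps above).
ROBOTS_BODY = "User-agent: *\nDisallow: /tmp/*\nDisallow: /foo.html\n"


def url_path(request_target: str) -> str:
    """Approximate req.url.path: the path component, without any query string."""
    return urlsplit(request_target).path


def handle_request(request_target: str) -> Optional[Tuple[int, str, str]]:
    """Hypothetical model of the condition plus synthetic response.

    Returns (status, content_type, body) when req.url.path == "/robots.txt"
    is true, or None when the request should continue normally. The model
    uses an exact string comparison, so it is case-sensitive.
    """
    if url_path(request_target) == "/robots.txt":
        return 200, "text/plain", ROBOTS_BODY
    return None


if __name__ == "__main__":
    for target in ("/robots.txt", "/robots.txt?utm_source=x", "/index.html"):
        result = handle_request(target)
        print(target, "->", "synthetic response" if result else "pass through")
```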

Why can't I customize my robots.txt file with global.prod.fastly.net?

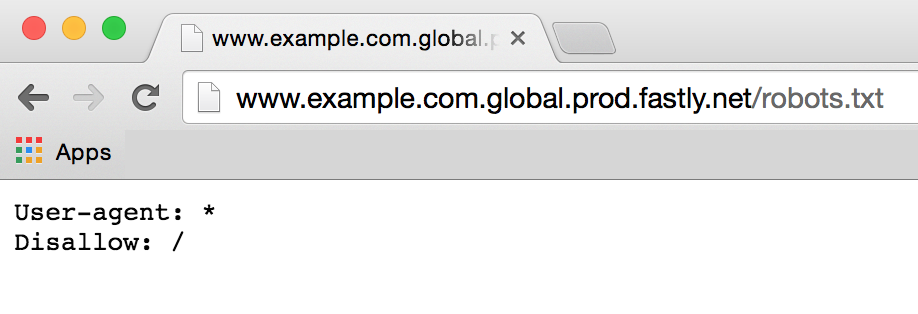

To test how your production site will perform using Fastly's services, you can add the .global.prod.fastly.net extension to your domain (for example, www.example.com.global.prod.fastly.net) in a browser or in a curl command.

To prevent Google from accidentally crawling this test URL, we provide an internal robots.txt file that instructs Google's web crawlers to ignore all pages for all hostnames that end in .prod.fastly.net.

This internal robots.txt file cannot be customized via the Fastly web interface until after you have set the CNAME DNS record for your domain to point to global.prod.fastly.net.
