Serving stale content

Last updated 2024-10-07

Fastly can optionally serve stale content when there is a problem with your origin server or if new content is taking a long time to fetch from your origin server. For example, if Fastly can't contact your origin server, our POPs will continue to serve cached content when users request it. These features are not enabled by default.

TIP

For more information on serving stale content, see our documentation on staleness and revalidation.

Serving old content while fetching new content

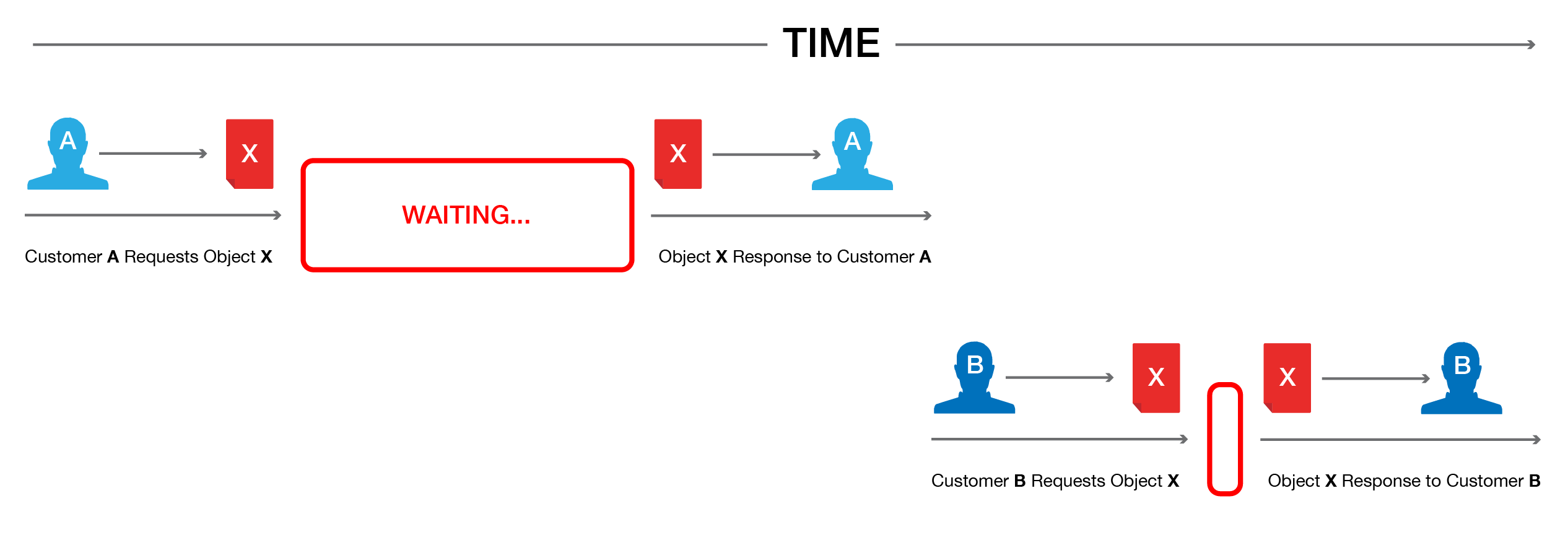

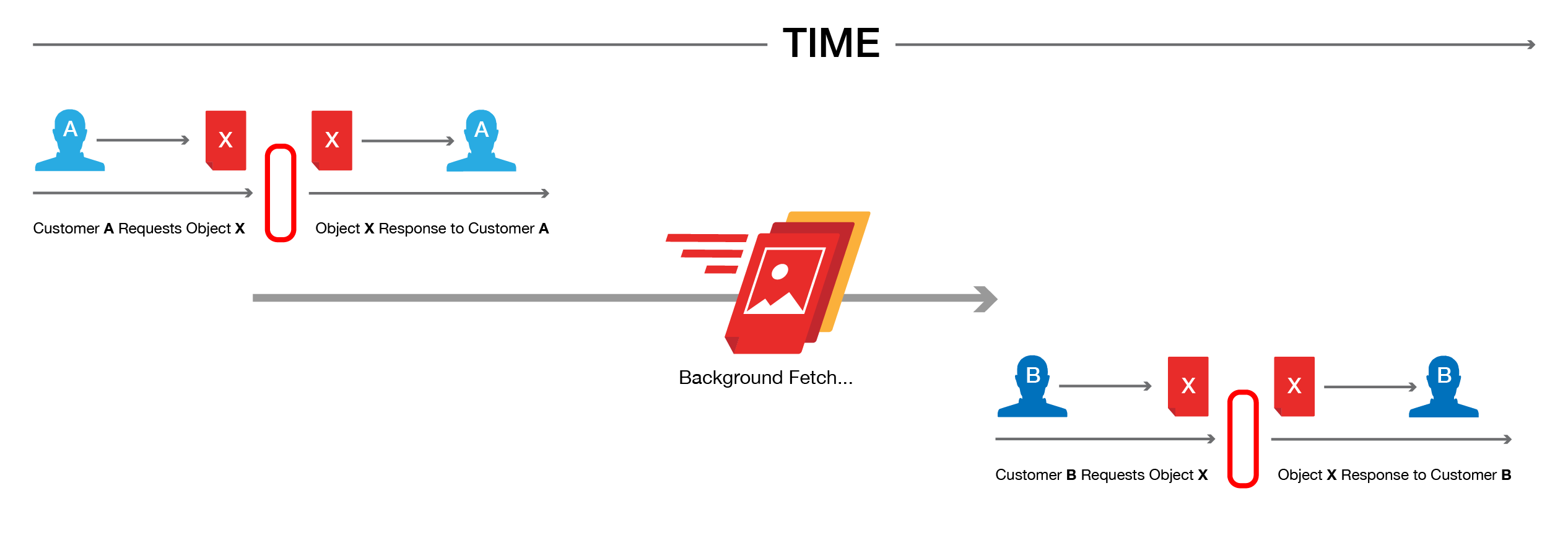

Certain pieces of content can take a long time to generate. Once the content is cached it will be served quickly, but the first user to try to access it will pay a latency penalty.

This is unavoidable if the cache is completely cold. However, if the object is in cache and its TTL has expired, Fastly can be configured to serve the stale content while the new content is fetched in the background.

Fastly builds on the behavior proposed in RFC 5861 "HTTP Cache-Control Extensions for Stale Content" by Mark Nottingham, which is under consideration for inclusion in Google's Chrome browser.

Enabling serve stale

NOTE

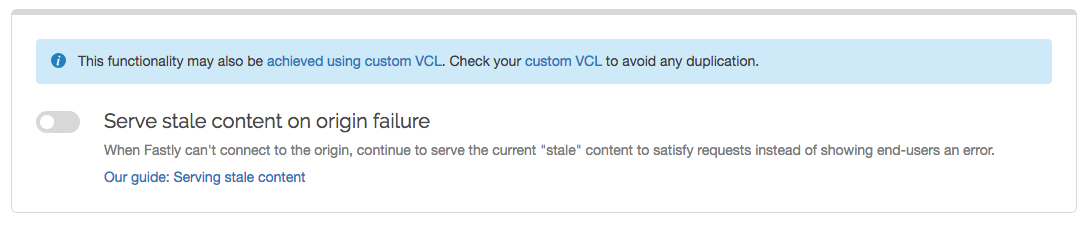

If you've already enabled serving stale content on an error via custom VCL, adding this feature via the Fastly control panel will set a different stale TTL. To avoid this, check your custom VCL and remove the stale-if-error statement before enabling this feature via the Fastly control panel.

To enable serving stale content on an error via the Fastly control panel for the default TTL period (43200 seconds or 12 hours), follow the steps below.

- Log in to the Fastly control panel.

- From the Home page, select the appropriate service. You can use the search box to search by ID, name, or domain.

- Click Edit configuration and then select the option to clone the active version.

- Click Settings.

- Click the Serve stale switch to automatically enable serving stale content for the default TTL period of 43200 seconds (12 hours).

- Click Activate to deploy your configuration changes.

Manually enabling serve stale

These instructions provide an advanced configuration that allows all three possible origin failure cases to be handled using VCL.

In the context of Varnish, there are three ways an origin can fail:

- The origin can be marked as unhealthy by failing health checks.

- If Varnish cannot contact the origin for any reason, a 503 error will be generated.

- The origin returns a valid HTTP response, but that response is not one we wish to serve to users (for instance, a 503).

The custom VCL shown below handles all three cases. If the origin is unhealthy, the default serve stale behavior is triggered by stale-if-error. In between the origin failing and being marked unhealthy, Varnish would normally return 503s. The custom VCL allows us to instead either serve stale if we have a stale copy, or to return a synthetic error page. The error page can be customized. The third case is handled by intercepting all 5XX errors in vcl_fetch and either serving stale or serving the synthetic error page.

WARNING

Do not purge all cached content if you are seeing 503 errors. Purge all overrides stale-if-error and increases the requests to your origin server, which could result in additional 503 errors.

Although not strictly necessary, health checks should be enabled in conjunction with this VCL. Without health checks enabled, all of the functionality will still work, but serving stale or synthetic responses will take much longer because each request must wait for the origin to time out. With health checks enabled, this problem is averted because the origin is marked as unhealthy.

The custom VCL shown below includes the Fastly standard boilerplate. Before uploading this to your service, be sure to customize or remove the following values to suit your specific needs:

- `if (beresp.status >= 500 && beresp.status < 600)` should be changed to include any HTTP response codes you wish to serve stale/synthetic for.

- `set beresp.stale_if_error = 86400s;` controls how long content will be eligible to be served stale and should be set to a meaningful amount for your configuration. If you're sending `stale_if_error` in Surrogate-Control or Cache-Control from origin, remove this entire line.

- `set beresp.stale_while_revalidate = 60s;` controls how long the `stale_while_revalidate` feature will be enabled for an object and should be set to a meaningful amount for your configuration. This feature causes Varnish to serve stale on a cache miss and fetch the newest version of the object from origin in the background. This can result in large performance gains on objects with short TTLs, and in general on any cache miss. Note that `stale_while_revalidate` overrides `stale_if_error`. That is, as long as the object is eligible to be served stale while revalidating, `stale_if_error` will have no effect. If you're sending `stale_while_revalidate` in Surrogate-Control or Cache-Control from origin, remove this entire line.

- `synthetic {"<!DOCTYPE html>Your HTML!</html>"};` is the synthetic response Varnish will return if no stale version of an object is available and should be set appropriately for your configuration. You can embed your HTML, CSS, or JS here. Use caution when referencing external CSS and JS documents. If your origin is offline they may be unavailable as well.

    sub vcl_recv {
    #FASTLY recv

      # Normally, you should consider requests other than GET and HEAD to be uncacheable
      # (to this we add the special FASTLYPURGE method)
      if (req.method != "HEAD" && req.method != "GET" && req.method != "FASTLYPURGE") {
        return(pass);
      }

      # If you are using image optimization, insert the code to enable it here
      # See https://www.fastly.com/documentation/reference/io/ for more information.

      return(lookup);
    }

    sub vcl_hash {
      set req.hash += req.url;
      set req.hash += req.http.host;
      #FASTLY hash
      return(hash);
    }

    sub vcl_hit {
    #FASTLY hit
      return(deliver);
    }

    sub vcl_miss {
    #FASTLY miss
      return(fetch);
    }

    sub vcl_pass {
    #FASTLY pass
      return(pass);
    }

    sub vcl_fetch {
      /* handle 5XX (or any other unwanted status code) */
      if (beresp.status >= 500 && beresp.status < 600) {

        /* deliver stale if the object is available */
        if (stale.exists) {
          return(deliver_stale);
        }

        if (req.restarts < 1 && (req.method == "GET" || req.method == "HEAD")) {
          restart;
        }
      }

      /* set stale_if_error and stale_while_revalidate (customize these values) */
      set beresp.stale_if_error = 86400s;
      set beresp.stale_while_revalidate = 60s;

    #FASTLY fetch

      # Unset headers that reduce cacheability for images processed using the Fastly image optimizer
      if (req.http.X-Fastly-Imageopto-Api) {
        unset beresp.http.Set-Cookie;
        unset beresp.http.Vary;
      }

      # Log the number of restarts for debugging purposes
      if (req.restarts > 0) {
        set beresp.http.Fastly-Restarts = req.restarts;
      }

      # If the response is setting a cookie, make sure it is not cached
      if (beresp.http.Set-Cookie) {
        return(pass);
      }

      # By default we set a TTL based on the `Cache-Control` header but we don't parse additional directives
      # like `private` and `no-store`. Private in particular should be respected at the edge:
      if (beresp.http.Cache-Control ~ "(?:private|no-store)") {
        return(pass);
      }

      # If no TTL has been provided in the response headers, set a default
      if (!beresp.http.Expires && !beresp.http.Surrogate-Control ~ "max-age" && !beresp.http.Cache-Control ~ "(?:s-maxage|max-age)") {
        set beresp.ttl = 3600s;

        # Apply a longer default TTL for images processed using Image Optimizer
        if (req.http.X-Fastly-Imageopto-Api) {
          set beresp.ttl = 2592000s; # 30 days
          set beresp.http.Cache-Control = "max-age=2592000, public";
        }
      }

      return(deliver);
    }

    sub vcl_error {
    #FASTLY error

      /* handle 503s */
      if (obj.status >= 500 && obj.status < 600) {

        /* deliver stale object if it is available */
        if (stale.exists) {
          return(deliver_stale);
        }

        /* otherwise, return a synthetic */

        /* include your HTML response here */
        synthetic {"<!DOCTYPE html><html>Replace this text with the error page you would like to serve to clients if your origin is offline.</html>"};
        return(deliver);
      }

    }

    sub vcl_deliver {
    #FASTLY deliver
      return(deliver);
    }

    sub vcl_log {
    #FASTLY log
    }

Adding headers

After you add the custom VCL, you can finish manually enabling serving stale content by adding a stale-while-revalidate or stale-if-error directive to either the Cache-Control or Surrogate-Control headers in the response from your origin server. For example:

    Cache-Control: max-age=600, stale-while-revalidate=30

will cache some content for 10 minutes and, at the end of that 10 minutes, will serve stale content for up to 30 seconds while new content is being fetched.

Similarly, this statement:

    Surrogate-Control: max-age=3600, stale-if-error=86400

instructs the cache to update the content every hour (3600 seconds) but, if the origin is down, to show stale content for a day (86400 seconds).
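To make the timing in these two header examples concrete, here is a minimal Python sketch that models the freshness windows. It is an illustration only, not Fastly's implementation: the function names `parse_directives` and `classify_object` are hypothetical, the boundary handling is a simplification, and both stale windows are assumed to start when `max-age` runs out, as described in RFC 5861. The stale-while-revalidate window is checked first, on the assumption that it takes precedence while the object is still eligible for it.

```python
def parse_directives(header_value):
    """Parse a header value such as 'max-age=600, stale-while-revalidate=30'
    into a dict mapping directive names to integer seconds."""
    directives = {}
    for part in header_value.split(","):
        name, sep, value = part.strip().partition("=")
        value = value.strip()
        # Keep only directives of the form name=<non-negative integer>.
        if sep and value.isdigit():
            directives[name.strip().lower()] = int(value)
    return directives


def classify_object(age_seconds, header_value):
    """Return which window a cached object of the given age falls into.

    Hypothetical states used for illustration:
      'fresh'                   served from cache normally
      'stale_while_revalidate'  served stale while refetched in the background
      'stale_if_error_only'     served stale only if the origin fails
      'expired'                 no longer eligible to be served
    """
    d = parse_directives(header_value)
    max_age = d.get("max-age", 0)
    swr = d.get("stale-while-revalidate", 0)
    sie = d.get("stale-if-error", 0)

    if age_seconds < max_age:
        return "fresh"
    staleness = age_seconds - max_age  # seconds past the end of max-age
    if staleness < swr:
        return "stale_while_revalidate"
    if staleness < sie:
        return "stale_if_error_only"
    return "expired"


if __name__ == "__main__":
    swr_header = "max-age=600, stale-while-revalidate=30"
    sie_header = "max-age=3600, stale-if-error=86400"
    for age in (599, 610, 700):
        print(age, classify_object(age, swr_header))
    for age in (100, 4000, 3600 + 86400):
        print(age, classify_object(age, sie_header))
```

With the first header, an object aged 610 seconds falls in the 30-second revalidation window, while one aged 700 seconds no longer qualifies. With the second header, an object aged 4000 seconds is past its one-hour freshness lifetime but remains eligible to be served if the origin is down.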

Alternatively, these behaviors can be controlled from within VCL by setting the following variables in vcl_fetch:

    set beresp.stale_while_revalidate = 30s;
    set beresp.stale_if_error = 86400s;

Interaction with grace

Stale-if-error works exactly the same as Varnish's grace variable such that these two statements are equivalent:

    set beresp.grace = 86400s;
    set beresp.stale_if_error = 86400s;

However, if a grace statement is present in VCL it will override any stale-if-error statements in any Cache-Control or Surrogate-Control response headers.

Why serving stale content may not work as expected

Here are some things to consider if Fastly isn't serving stale content:

- Cache: Stale objects are only available for cacheable content.

- VCL: Setting the `req.hash_always_miss` or `req.hash_ignore_busy` variable to `true` invalidates the effect of `stale-while-revalidate`.

- Shielding: If you don't have shielding enabled, a POP can only serve stale on errors if a request for that cacheable object was made through that POP before. We recommend enabling shielding to increase the probability that stale content on error exists. Shielding is also a good way to quickly refill the cache after performing a purge all.

- Requests: As traffic to your site increases, you're more likely to see stale objects available (even if shielding is disabled). It's reasonable to assume that popular assets will be cached at multiple POPs.

- Least Recently Used (LRU): Fastly has an LRU list, so objects are not guaranteed to stay in cache for the entirety of their TTL (time to live). Eviction depends on many factors, including the object's request frequency, its TTL, and the POP from which it's being served. For instance, objects with a TTL of 3700s or longer get written to disk, whereas objects with shorter TTLs end up in transient, in-memory-only storage. We recommend setting your TTL to more than 3700s when possible.

- Purges: Whenever possible, you should purge content using our soft purge feature. Soft purge allows you to easily mark content as outdated (stale) instead of permanently deleting it from Fastly's caches. If you can't use soft purge, we recommend purging by URL or using surrogate keys instead of performing a purge all.
