Shielding

Last updated 2022-11-14

As a content delivery network, Fastly works by having any one of our global points of presence (POPs) respond to requests that would otherwise be sent directly to your origin server. Each POP acts independently. Whenever a POP doesn't have a cached version of the requested resource, it makes a request directly to your origin, even if another POP is already making the same request. You can reduce the load on your origin servers by designating one of Fastly's POPs as an origin "shield." A shield POP ensures that requests to your origin come from a single POP, which increases the chance that an end user request results in a cache HIT.

For example, consider end users in three regions: Lyon (France), Washington DC (United States), and Tokyo (Japan). Each of these regions has a local Fastly POP that caches content by default. Without shielding, if the user in Lyon requests a resource that is not cached on the Fastly Paris POP, that POP makes a request to the origin server, caches the resource from the response, and returns it to the user.

With shielding enabled, the POP you designate collects all requests to your origin server instead. For example, suppose the Fastly Virginia POP is designated as the shield for a server located in the AWS us-east-1 region (as shown in the illustration below).

In this scenario, if the user in Lyon requests a resource that isn't cached on the Fastly Paris POP, the Paris POP forwards the request to the shield POP in Virginia. If the shield POP already has the resource cached, it returns the resource to the regional POP, which caches it and returns it to the user. Otherwise, the shield POP requests the resource from the origin server and caches the response. It then returns the resource to the regional POP, which also caches it before returning it to the user.

For more advanced use cases, see Advanced shielding scenarios.

Enabling shielding

IMPORTANT

If you are using Google Cloud Storage as your origin, you need to follow the steps in our GCS setup guide instead of the steps below.

Enable shielding with these steps:

Read the caveats of shielding information below for details about the implications of and potential pitfalls involved with enabling shielding for your organization.

- Log in to the Fastly web interface.

- From the Home page, select the appropriate service. You can use the search box to search by ID, name, or domain. You can also click Compute services or CDN services to access a list of services by type.

- Click Edit configuration and then select the option to clone the active version.

- Click Origins.

Click the name of the Host you want to edit.

From the Shielding menu, select the data center to use as your shield keeping the following in mind:

- Generally, we recommend selecting a data center close to your backend. Doing this allows faster content delivery because we optimize requests between the shield POP you're selecting (the one close to your server) and the edge POP (the one close to the user making the request). Read our guidance on choosing a shield location for more information.

- With multiple backends, each backend will have its own shield defined. This allows flexibility if your company has backends selected geographically and different shield POPs are desired.

Click Update to save your changes.

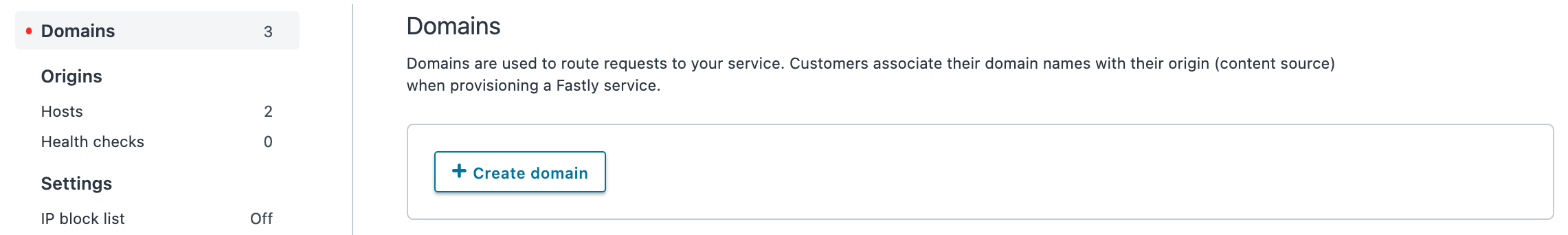

If you have changed the default Host or have added a header to change the Host, add the modified hostname to your list of domains. Do this by clicking Domains and checking to make sure the Host in question appears on the page. If it isn't included, add it by clicking Create domain.

With shielding enabled, queries from other POPs appear as incoming requests to the shield. If the shield doesn't know about the modified hostname, it doesn't know which service to match the request to. Including the origin's hostname in the domain list eliminates this concern.

- Click Activate to deploy your configuration changes.

Caveats of shielding

Shielding affects not only traffic and hit ratios but also configuration and performance. When you configure shielding, be aware of the following caveats.

Inbound traffic billing

Inbound traffic to a shield is billed as regular traffic, including requests to populate remote POPs. Enabling shielding will incur some additional Fastly bandwidth charges. Savings on your origin bandwidth and origin server load are likely to offset those charges. Pass-through requests also go through the shield first rather than directly to the origin.

Global HIT ratio calculation

The global HIT ratio may appear lower than the actual proportion of requests served without contacting your origin. This is because shielding is not taken into account when the global hit ratio is calculated. If an edge node doesn't have an object in its cache, it reports a miss. A local MISS followed by a shield HIT is reported as one miss and one hit in the statistics, even though there is no call to the backend. It also results in one additional request, from the edge node to the shield. A local MISS followed by a shield MISS results in two requests, because the shield then fetches the resource from your origin. For more information about caching with shielding, see our shielding developer documentation.
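The following worked example shows why the reported figure can look low. It uses hypothetical numbers for illustration only. It also assumes that the reported ratio is hits divided by hits plus misses, counted across edge and shield nodes alike.

Suppose there are 100 end-user requests. Of these, 80 are edge HITs and 20 are edge MISSes. Of those 20 misses, the shield serves 15 from its cache (shield HIT) and fetches 5 from your origin (shield MISS).

```latex
\text{reported hit ratio} = \frac{80 + 15}{(80 + 15) + (20 + 5)} = \frac{95}{120} \approx 0.792
\qquad
\text{requests served without an origin fetch} = \frac{100 - 5}{100} = 0.95
```

Under these assumptions, the statistics would show roughly 79%, even though 95% of end-user requests never reached your origin.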

Shield failover

If a specified shield POP is inaccessible for a request (e.g., because of intervening network issues), that request will go directly from the edge node to your origin server, bypassing the shield.

Backends manually defined using VCL

If you enable shielding, you can't define backends manually in VCL. Shielding depends entirely on backends being defined as objects through the web interface or API. Other custom VCL works as usual.

Automatic load balancing

If you've selected auto load balancing, you can select only one shield in total. To use multiple shields with auto load balancing, you must use custom VCL.

Sticky load balancing

Enabling sticky load balancing and shielding at the same time requires custom VCL. Sticky load balancers use client.identity to choose where to send the session, and client.identity defaults to the client's IP address. That works under normal circumstances. With shielding enabled, however, the request arriving at the shield comes from the edge POP, so the IP is that POP's IP rather than the end user's. To use shielding with a custom sticky load balancer, use the following:

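```vcl
# Place this in vcl_recv. On the shield, the connecting IP belongs to the edge POP,
# so use the original client IP that Fastly passes along in Fastly-Client-IP.
if (req.http.fastly-ff) {
  set client.identity = req.http.Fastly-Client-IP;
}
```

Host header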

Use caution when changing the Host header before the request reaches the shield. Fastly matches each request to a service by its Host header. If the Host header doesn't match a domain within the service, expect a 500 error. To ensure consistent behavior of Fastly customer services and origins, we normalize the Host header's value to all lowercase in the vcl_hash subroutine. This means the hash subroutine behaves predictably no matter how your site's domain name is capitalized in the request. This normalization does not apply to any other part of the URL, which remains case-sensitive.

Purging conflicts can occur if the Host header is changed to a domain that exists in a different service. For example, say Service A has hostname a.example.com and Service B has hostname b.example.com. If Service B changes the Host header to a.example.com, the edge treats the request as belonging to Service B, but the shield treats it as belonging to Service A. When you change the Host header before the request reaches the shield, the cached object is split across both services, because a.example.com and b.example.com are treated as separate objects. As a precaution, purge the object from both Service A and Service B so that Fastly retrieves the newest version on the next request. For information about the caveats of changing a Host header, see our article on Specifying an override host.

VCL execution

VCL gets executed twice: once on the edge POP and again on the shield POP. Changes to beresp and resp can affect the caching of a URL on the shield and edge. Consider the following examples.
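Because the same VCL runs on both nodes, logic that should run on only one of them needs a guard. A minimal sketch is shown below. It assumes, as the Vary example later in this article does, that the presence of the Fastly-FF header means another Fastly cache forwarded the request. The header name X-Shield-Role is hypothetical and used only for illustration.

```vcl
sub vcl_recv {
  # Fastly-FF is present when another Fastly cache forwarded the request,
  # so its absence means this node received the request from the end user.
  if (req.http.Fastly-FF) {
    # Second execution: this node is acting as the shield for the request.
    set req.http.X-Shield-Role = "shield";  # hypothetical header name
  } else {
    # First execution: this node is the edge POP that received the request.
    set req.http.X-Shield-Role = "edge";    # hypothetical header name
  }
  # ... rest of vcl_recv
}
```

Clients can send arbitrary headers, so treat a check like this as a routing hint rather than a security control.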

Say you want Fastly to cache an object for one hour (3600 seconds) and browsers to cache it for ten seconds. The origin sends Cache-Control: max-age=3600. In vcl_fetch, you unset beresp.http.Cache-Control and then reset Cache-Control to max-age=10. With shielding enabled, however, the result is not what you expect. The shield caches the object for 3600 seconds. The response it sends to the edge carries max-age=10, so the edge caches the object for only ten seconds.
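The naive configuration described above might look roughly like the following sketch. It is illustrative only and is shown so the problem is concrete. The comments trace what happens on each node.

```vcl
sub vcl_fetch {
  # On the shield, the TTL has already been derived from the origin's
  # Cache-Control: max-age=3600 before this subroutine runs, so the
  # shield still caches the object for an hour.
  unset beresp.http.Cache-Control;
  # This rewritten header is stored with the object and sent to the edge.
  # The edge derives a 10-second TTL from it, which is the unexpected result.
  set beresp.http.Cache-Control = "max-age=10";
  # ... rest of vcl_fetch
}
```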

A better option in this instance is to use both the Surrogate-Control and Cache-Control response headers. Surrogate-Control takes precedence over Cache-Control for Fastly's caches, and the edge node strips it before delivering the response to the end user. The max-age in Cache-Control then applies to the browser. The origin response headers would look like this:

```http
Surrogate-Control: max-age=3600
Cache-Control: max-age=10
```

Another common pitfall involves sending the wrong Vary header to an edge POP. For example, suppose your VCL takes a specific value from a cookie, puts it in a custom header, and adds that header to the Vary header. To maximize compatibility with caches outside your control (such as the shared proxies commonly seen in large enterprises), vcl_deliver then rewrites the Vary header, replacing the custom header with Cookie. The code might look like this:

```vcl
sub vcl_recv {
  # Set the custom header
  if (req.http.Cookie ~ "ABtesting=B") {
    set req.http.X-ABtesting = "B";
  } else {
    set req.http.X-ABtesting = "A";
  }
  # ... rest of vcl_recv
}

# ...

sub vcl_fetch {
  # Vary on the custom header
  if (beresp.http.Vary) {
    set beresp.http.Vary = beresp.http.Vary ", X-ABtesting";
  } else {
    set beresp.http.Vary = "X-ABtesting";
  }
  # ... rest of vcl_fetch
}

# ...

sub vcl_deliver {
  # Hide the existence of the header from downstream
  if (resp.http.Vary) {
    set resp.http.Vary = regsub(resp.http.Vary, "X-ABtesting", "Cookie");
  }
}
```

When combined with shielding, however, the shield runs the same vcl_deliver before responding to the edge POP. As a result, edge POPs receive Cookie in the Vary header, vary their cache on the entire Cookie header, and have a very poor hit rate. To work around this, amend the VCL so that Vary is rewritten to Cookie only when the request is not coming from another Fastly cache. The Fastly-FF header is a good way to tell. The code would look something like this (vcl_recv is the same as in the example above):

```vcl
# Same vcl_recv as in the previous example

sub vcl_fetch {
  # Vary on the custom header; don't add it if the shield POP already did
  if (beresp.http.Vary !~ "X-ABtesting") {
    if (beresp.http.Vary) {
      set beresp.http.Vary = beresp.http.Vary ", X-ABtesting";
    } else {
      set beresp.http.Vary = "X-ABtesting";
    }
  }
  # ... rest of vcl_fetch
}

# ...

sub vcl_deliver {
  # Hide the existence of the header from downstream, but only when
  # responding to an end user rather than to another Fastly POP
  if (resp.http.Vary && !req.http.Fastly-FF) {
    set resp.http.Vary = regsub(resp.http.Vary, "X-ABtesting", "Cookie");
  }
}
```

POP maintenance

As part of our standard maintenance procedures, Fastly may perform maintenance on a POP that you have designated as an origin shielding location. When this happens, the POP remains in service, but we may bring individual machines within that POP in or out of service as needed. Fastly may also decommission a POP. In that case, Fastly will move your shielding location to a neighboring data center on your behalf. Before standard maintenance starts, we post a notification update to our service status page. If you prefer to be alerted individually before maintenance happens, open a support ticket at https://support.fastly.com/. Include your customer ID and ask to be notified of upcoming shielding migrations at the locations where you shield. We will then contact you by email to announce upcoming migrations. If necessary, we'll ask you to perform certain actions at a time that's convenient for you.
