
Log streaming: Datadog

Last updated 2024-02-16

Fastly's Real-Time Log Streaming feature can be configured to send logs in a format readable by Datadog. Datadog is a cloud-based monitoring and analytics service that gives you visibility into the applications in your stack and aggregates the results.

NOTE

Fastly does not provide direct support for third-party services. Read Fastly's Terms of Service for more information.

Prerequisites

Before adding Datadog as a logging endpoint for Fastly services, you will need to:

- Register for a Datadog account. You can sign up on the Datadog site. Datadog offers a free plan with some restrictions, or you can upgrade for more features. Where you register your Datadog account, either in the United States (US) or the European Union (EU), determines the region setting you use when you set up the logging endpoint at Fastly.

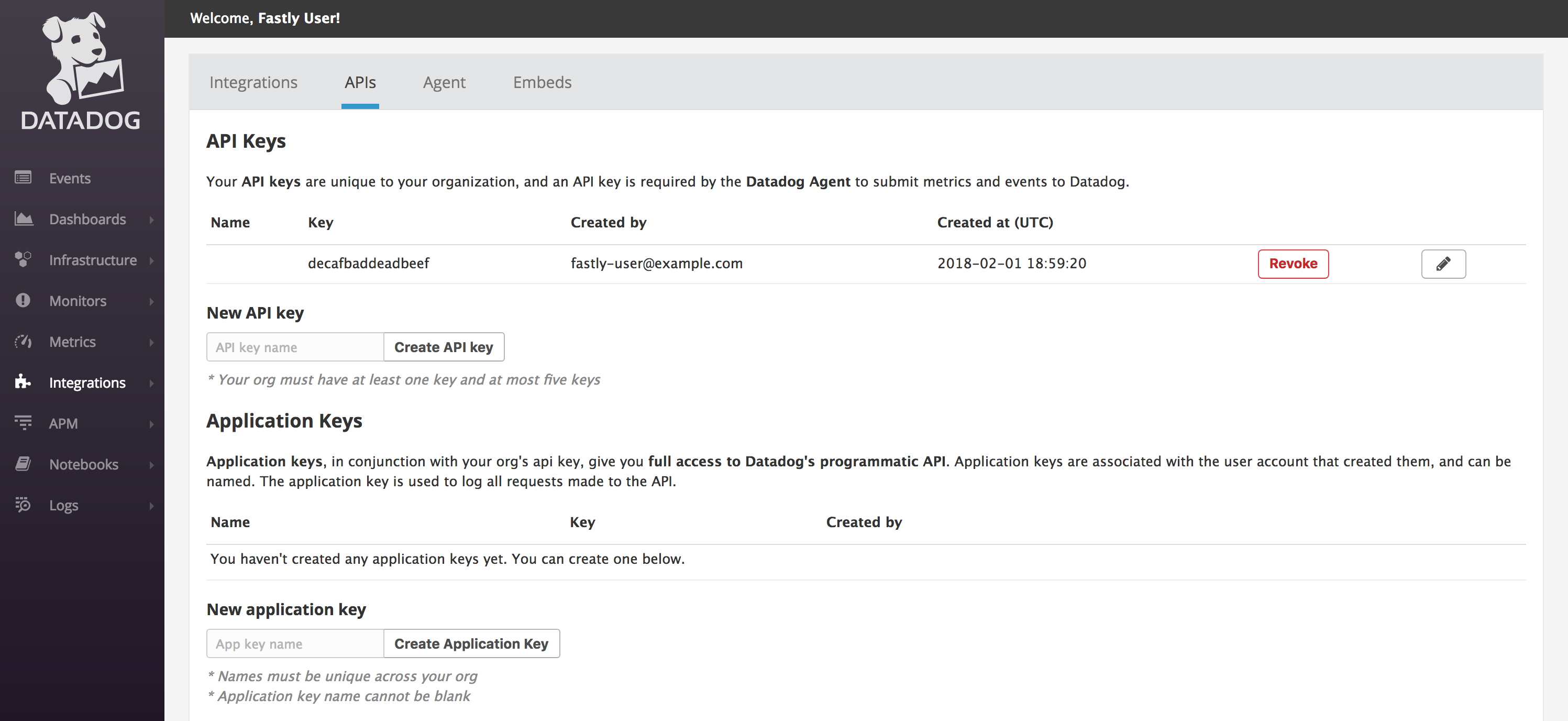

- Get your Datadog API key from your settings page on Datadog. In the Datadog interface, navigate to Integrations -> APIs, where you can create or retrieve an API key.

For illustration, this guide uses the API key decafbaddeadbeef. Your API key will be different. Make a note of it somewhere safe.
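Before configuring Fastly, you may want to confirm that the key works for the Datadog site you registered with. The following is a minimal Python sketch, not an official Datadog client. It assumes Datadog's API key validation endpoint (`/api/v1/validate`, authenticated with a `DD-API-KEY` header) and assumes the US and EU sites map to the API hosts listed in `DATADOG_API_HOSTS`; the function name is hypothetical. It only builds the request and sends nothing.

```python
from urllib.request import Request

# Assumed mapping from the two Datadog sites this guide mentions to API hosts.
DATADOG_API_HOSTS = {
    "US": "https://api.datadoghq.com",
    "EU": "https://api.datadoghq.eu",
}


def build_validate_key_request(api_key: str, region: str) -> Request:
    """Build (but do not send) a request to Datadog's API key validation endpoint."""
    try:
        host = DATADOG_API_HOSTS[region]
    except KeyError:
        # Fail early rather than silently checking the key against the wrong site.
        raise ValueError(f"unsupported Datadog region: {region!r}") from None
    return Request(
        f"{host}/api/v1/validate",
        headers={"DD-API-KEY": api_key},  # the key is sent as a header, not in the URL
        method="GET",
    )
```

To run the check, pass the request to `urllib.request.urlopen`. A valid key should return a small JSON body such as `{"valid": true}`, while a rejected key is expected to produce an HTTP error. Checking against the wrong region's host is a common way to get a misleading failure, which is why the region is an explicit argument.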

Adding Datadog as a logging endpoint

After you've created a Datadog account and noted your Datadog API key, follow the steps below to add Datadog as a logging endpoint for Fastly services.


- Review the information in our guide to setting up remote log streaming.

- In the Datadog area, click Create endpoint.

- Fill out the Create a Datadog endpoint fields as follows:

  - In the Name field, enter a human-readable name for the endpoint.

  - In the Placement area, select where the logging call should be placed in the generated VCL. Valid values are Format Version Default, waf_debug (waf_debug_log), and None. Read our guide on changing log placement for more information.

  - In the Log format field, enter the data to send to Datadog. This format is described below, along with additional suggestions.

  - From the Region menu, select the region to stream logs to.

  - In the API key field, enter the API key of your Datadog account.

- Click Create to create the new logging endpoint.

- Click Activate to deploy your configuration changes.
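If you would rather script these steps than use the web interface, the Fastly API can do the same work. The following is a minimal Python sketch, not an official client. The endpoint paths, the `name`, `region`, `token`, and `format` parameter names, and both function names are assumptions to verify against the Fastly API reference. It also assumes you already have an editable draft version of your service and leaves optional settings such as placement at their defaults. It only builds the requests; nothing is sent.

```python
from urllib.parse import urlencode
from urllib.request import Request

FASTLY_API = "https://api.fastly.com"

# Only the two regions this guide mentions; Fastly may accept others.
SUPPORTED_REGIONS = ("US", "EU")


def build_create_datadog_endpoint(fastly_token: str, service_id: str, version: int,
                                  name: str, dd_api_key: str, region: str,
                                  log_format: str) -> Request:
    """Build (but do not send) the request that creates a Datadog endpoint.

    Assumes `version` is an editable (draft) version of the service.
    """
    if region not in SUPPORTED_REGIONS:
        raise ValueError(f"unsupported Datadog region: {region!r}")
    body = urlencode({
        "name": name,          # Name field
        "region": region,      # Region menu
        "token": dd_api_key,   # API key field (assumed parameter name)
        "format": log_format,  # Log format field, as a single line
    }).encode()
    return Request(
        f"{FASTLY_API}/service/{service_id}/version/{version}/logging/datadog",
        data=body,
        headers={"Fastly-Key": fastly_token,
                 "Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def build_activate_version(fastly_token: str, service_id: str, version: int) -> Request:
    """Build the request that activates the version (the Activate step)."""
    return Request(
        f"{FASTLY_API}/service/{service_id}/version/{version}/activate",
        headers={"Fastly-Key": fastly_token},
        method="PUT",
    )
```

Send the two requests in order with `urllib.request.urlopen`: first create the endpoint, then activate the version. Keeping activation as a separate call mirrors the Create and Activate steps above and lets you review the draft version before deploying it.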

Logs should begin appearing in your Datadog account a few seconds after you've created the endpoint and deployed your service changes. You can then view them in the Datadog Log Explorer.

Using the JSON logging format

Data sent to Datadog must be serialized as a JSON object. Datadog automatically parses JSON-formatted logs and recognizes several reserved fields, such as service, host, and date.
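Because each log line must be a JSON object, it can help to check a sample line after Fastly has substituted the placeholders. For instance, a header value containing an unescaped double quote would break parsing. The following is a minimal Python sketch. The function name is hypothetical, and it only checks for the three reserved fields named above, although Datadog recognizes others.

```python
import json

# Reserved fields named in this guide; Datadog recognizes others too.
RESERVED_FIELDS = ("service", "host", "date")


def check_log_line(line: str) -> list[str]:
    """Parse one rendered log line and return the reserved fields it lacks.

    Raises ValueError if the line is not valid JSON or is not a JSON object.
    (json.JSONDecodeError is a subclass of ValueError.)
    """
    record = json.loads(line)
    if not isinstance(record, dict):
        raise ValueError("log line must be a JSON object")
    return [field for field in RESERVED_FIELDS if field not in record]
```

An empty list means the line parses and carries all three fields. A `ValueError` usually points to a value that should have been escaped, for example with `json.escape()` as the example format does for `req.url`.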

NOTE

The JSON in this example is formatted for ease of reading. For proper parsing, it must be added as a single line in the Log format field, removing all line breaks and indentation whitespace first.

In the JSON below, for example, service is set to the ID of the Fastly service that sent the log. You could instead use a human-readable name, or group all logs under a common name such as fastly.

```
{
  "ddsource": "fastly",
  "service": "%{req.service_id}V",
  "date": "%{begin:%Y-%m-%dT%H:%M:%S%z}t",
  "time_start": "%{begin:%Y-%m-%dT%H:%M:%S%Z}t",
  "time_end": "%{end:%Y-%m-%dT%H:%M:%S%Z}t",
  "http": {
    "request_time_ms": %{time.elapsed.msec}V,
    "method": "%m",
    "url": "%{json.escape(req.url)}V",
    "useragent": "%{User-Agent}i",
    "referer": "%{Referer}i",
    "protocol": "%H",
    "request_x_forwarded_for": "%{X-Forwarded-For}i",
    "status_code": "%s"
  },
  "network": {
    "client": {
      "ip": "%h",
      "name": "%{client.as.name}V",
      "number": "%{client.as.number}V",
      "connection_speed": "%{client.geo.conn_speed}V"
    },
    "destination": {
      "ip": "%A"
    },
    "geoip": {
      "geo_city": "%{client.geo.city.utf8}V",
      "geo_country_code": "%{client.geo.country_code}V",
      "geo_continent_code": "%{client.geo.continent_code}V",
      "geo_region": "%{client.geo.region}V"
    },
    "bytes_written": %B,
    "bytes_read": %{req.body_bytes_read}V
  },
  "host": "%{if(req.http.Fastly-Orig-Host, req.http.Fastly-Orig-Host, req.http.Host)}V",
  "origin_host": "%v",
  "is_ipv6": %{if(req.is_ipv6, "true", "false")}V,
  "is_tls": %{if(req.is_ssl, "true", "false")}V,
  "tls_client_protocol": "%{json.escape(tls.client.protocol)}V",
  "tls_client_servername": "%{json.escape(tls.client.servername)}V",
  "tls_client_cipher": "%{json.escape(tls.client.cipher)}V",
  "tls_client_cipher_sha": "%{json.escape(tls.client.ciphers_sha)}V",
  "tls_client_tlsexts_sha": "%{json.escape(tls.client.tlsexts_sha)}V",
  "is_h2": %{if(fastly_info.is_h2, "true", "false")}V,
  "is_h2_push": %{if(fastly_info.h2.is_push, "true", "false")}V,
  "h2_stream_id": "%{fastly_info.h2.stream_id}V",
  "request_accept_content": "%{Accept}i",
  "request_accept_language": "%{Accept-Language}i",
  "request_accept_encoding": "%{Accept-Encoding}i",
  "request_accept_charset": "%{Accept-Charset}i",
  "request_connection": "%{Connection}i",
  "request_dnt": "%{DNT}i",
  "request_forwarded": "%{Forwarded}i",
  "request_via": "%{Via}i",
  "request_cache_control": "%{Cache-Control}i",
  "request_x_requested_with": "%{X-Requested-With}i",
  "request_x_att_device_id": "%{X-ATT-Device-Id}i",
  "content_type": "%{Content-Type}o",
  "is_cacheable": %{if(fastly_info.state~"^(HIT|MISS)$", "true","false")}V,
  "response_age": "%{Age}o",
  "response_cache_control": "%{Cache-Control}o",
  "response_expires": "%{Expires}o",
  "response_last_modified": "%{Last-Modified}o",
  "response_tsv": "%{TSV}o",
  "server_datacenter": "%{server.datacenter}V",
  "req_header_size": %{req.header_bytes_read}V,
  "resp_header_size": %{resp.header_bytes_written}V,
  "socket_cwnd": %{client.socket.cwnd}V,
  "socket_nexthop": "%{client.socket.nexthop}V",
  "socket_tcpi_rcv_mss": %{client.socket.tcpi_rcv_mss}V,
  "socket_tcpi_snd_mss": %{client.socket.tcpi_snd_mss}V,
  "socket_tcpi_rtt": %{client.socket.tcpi_rtt}V,
  "socket_tcpi_rttvar": %{client.socket.tcpi_rttvar}V,
  "socket_tcpi_rcv_rtt": %{client.socket.tcpi_rcv_rtt}V,
  "socket_tcpi_rcv_space": %{client.socket.tcpi_rcv_space}V,
  "socket_tcpi_last_data_sent": %{client.socket.tcpi_last_data_sent}V,
  "socket_tcpi_total_retrans": %{client.socket.tcpi_total_retrans}V,
  "socket_tcpi_delta_retrans": %{client.socket.tcpi_delta_retrans}V,
  "socket_ploss": %{client.socket.ploss}V
}
```
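The note about entering the format as a single line can be handled with a small helper. A JSON library cannot reliably re-serialize this template because it is not strictly valid JSON: values such as `%{time.elapsed.msec}V` are unquoted placeholders. A simpler approach is to strip each line and join the results. The following is a minimal Python sketch; the function name is hypothetical, and it assumes no line break falls inside a quoted value.

```python
def to_single_line(log_format: str) -> str:
    """Collapse a pretty-printed log format into one line.

    Strips leading and trailing whitespace from every line and joins them,
    so spaces inside a line (for example in if(...) arguments) are kept.
    """
    return "".join(line.strip() for line in log_format.splitlines())
```

Paste the pretty-printed template into a string, pass it to `to_single_line`, and copy the result into the Log format field.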